Media is another vital component of a Unified Communication system. Once signaling is in place and working between two endpoints, information about media capabilities can be transferred, eventually allowing for streaming audio, making video calls, or exchanging other information.

In this blog article we will analyze what technologies are used to transfer information about available media between endpoints.

SDP

SDP (Session Description Protocol) is a format for describing the initialization parameters of streaming media, standardized by the IETF in 1998.

The session description fields are listed below, followed by an example. Fields marked with =* are optional:

Session description

    v=  (protocol version number, currently only 0)
    o=  (originator and session identifier: username, id, version number, network address)
    s=  (session name: mandatory with at least one UTF-8-encoded character)
    i=* (session title or short information)
    u=* (URI of description)
    e=* (zero or more email addresses with optional name of contacts)
    p=* (zero or more phone numbers with optional name of contacts)
    c=* (connection information; not required if included in all media)
    b=* (zero or more bandwidth information lines)
    One or more Time descriptions ("t=" and "r=" lines; see below)
    z=* (time zone adjustments)
    k=* (encryption key)
    a=* (zero or more session attribute lines)
    Zero or more Media descriptions (each one starting with an "m=" line; see below)

Time description (mandatory)

    t=  (time the session is active)
    r=* (zero or more repeat times)

Media description (if present)

    m=  (media name and transport address)
    i=* (media title or information field)
    c=* (connection information; optional if included at session level)
    b=* (zero or more bandwidth information lines)
    k=* (encryption key)
    a=* (zero or more media attribute lines, overriding the session attribute lines)

Below is a real example of SDP describing an audio / video session that uses G.711 μ-law for audio (static payload type 0) and H.263 for video (dynamic payload type 99), which is further specified by the attribute a=rtpmap:99 h263-1998/90000. Another attribute marks the session as recvonly (the sender of this SDP can only receive). The other endpoint should reply with a sendonly session in such a scenario. Attributes extend the capabilities of SDP, allowing it to describe new media types and carry additional information.

    v=0
    o=jdoe 2890844526 2890842807 IN IP4 10.47.16.5
    s=SDP Seminar
    i=A Seminar on the session description protocol
    u=http://www.example.com/seminars/sdp.pdf
    e=j.doe@example.com (Jane Doe)
    c=IN IP4 224.2.17.12/127
    t=2873397496 2873404696
    a=recvonly
    m=audio 49170 RTP/AVP 0
    m=video 51372 RTP/AVP 99
    a=rtpmap:99 h263-1998/90000

We can see that the standard is text-based, which makes it easy to grasp which media are involved in a session simply by reading the SDP itself.

In the example above, the client sending the SDP will be waiting for the following:

m=audio 49170 RTP/AVP 0

This entry refers to RTP/AVP audio using G.711 μ-law on port 49170 (payload type 0 stands for G.711 μ-law, while payload type 8 stands for G.711 A-law).

    m=video 51372 RTP/AVP 99
    a=rtpmap:99 h263-1998/90000

This entry refers to h263-1998 video on port 51372. In this case, the payload type 99 is dynamic, and the second line (the media attribute line) defines the exact content.
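To make the reading of the m= and a=rtpmap lines concrete, here is a minimal Python sketch that extracts the media sections from a shortened copy of the example above. The function name `parse_media` and the small `STATIC_PAYLOADS` table are illustrative assumptions, not part of any SDP library, and the parser ignores every field it does not need.

```python
# Shortened copy of the example SDP (optional i=, u= and e= lines left out).
SDP_TEXT = """\
v=0
o=jdoe 2890844526 2890842807 IN IP4 10.47.16.5
s=SDP Seminar
c=IN IP4 224.2.17.12/127
t=2873397496 2873404696
a=recvonly
m=audio 49170 RTP/AVP 0
m=video 51372 RTP/AVP 99
a=rtpmap:99 h263-1998/90000
"""

# Two static payload types from the RTP audio/video profile (RFC 3551).
# Assumption: only the G.711 variants are listed; a real table is longer.
STATIC_PAYLOADS = {0: "PCMU/8000", 8: "PCMA/8000"}


def parse_media(sdp_text):
    """Return one dict per m= section with its port and resolved formats."""
    sections = []
    current = None
    for raw in sdp_text.splitlines():
        line = raw.strip()
        if len(line) < 2 or line[1] != "=":
            continue  # not a "<type>=<value>" line
        key, value = line[0], line[2:]
        if key == "m":
            media, port, proto, *fmts = value.split()
            current = {
                "media": media,
                "port": int(port.split("/")[0]),  # tolerate "port/count"
                "proto": proto,
                "payloads": [int(f) for f in fmts],
                "rtpmap": {},
            }
            sections.append(current)
        elif key == "a" and current is not None and value.startswith("rtpmap:"):
            # Media-level attribute: "rtpmap:<payload type> <encoding>/<clock>"
            pt, encoding = value[len("rtpmap:"):].split(None, 1)
            current["rtpmap"][int(pt)] = encoding
    for section in sections:
        # Dynamic types come from rtpmap, static ones from the profile table.
        section["formats"] = [
            section["rtpmap"].get(pt, STATIC_PAYLOADS.get(pt, "unknown"))
            for pt in section["payloads"]
        ]
    return sections


for section in parse_media(SDP_TEXT):
    print(section["media"], section["port"], section["formats"])
```

Payload type 0 is resolved from the static table, while 99 is only meaningful because the a=rtpmap line that follows the m=video line defines it.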

SDP itself does not transfer media. That task is delegated to a transport protocol such as RTP, with a protocol like RTSP controlling the stream where needed. SDP is also completely “transport agnostic”: a session description can be carried over protocols such as SIP, inside an HTTP payload, or even via email.

RTP / RTCP

Once SDP descriptions have been exchanged between endpoints, the audio / video communication can take place on the ports they advertise. RTP / RTCP can work over both UDP and TCP, although UDP is far more common.

Media is exchanged by means of RTP (Real-time Transport Protocol) packets.

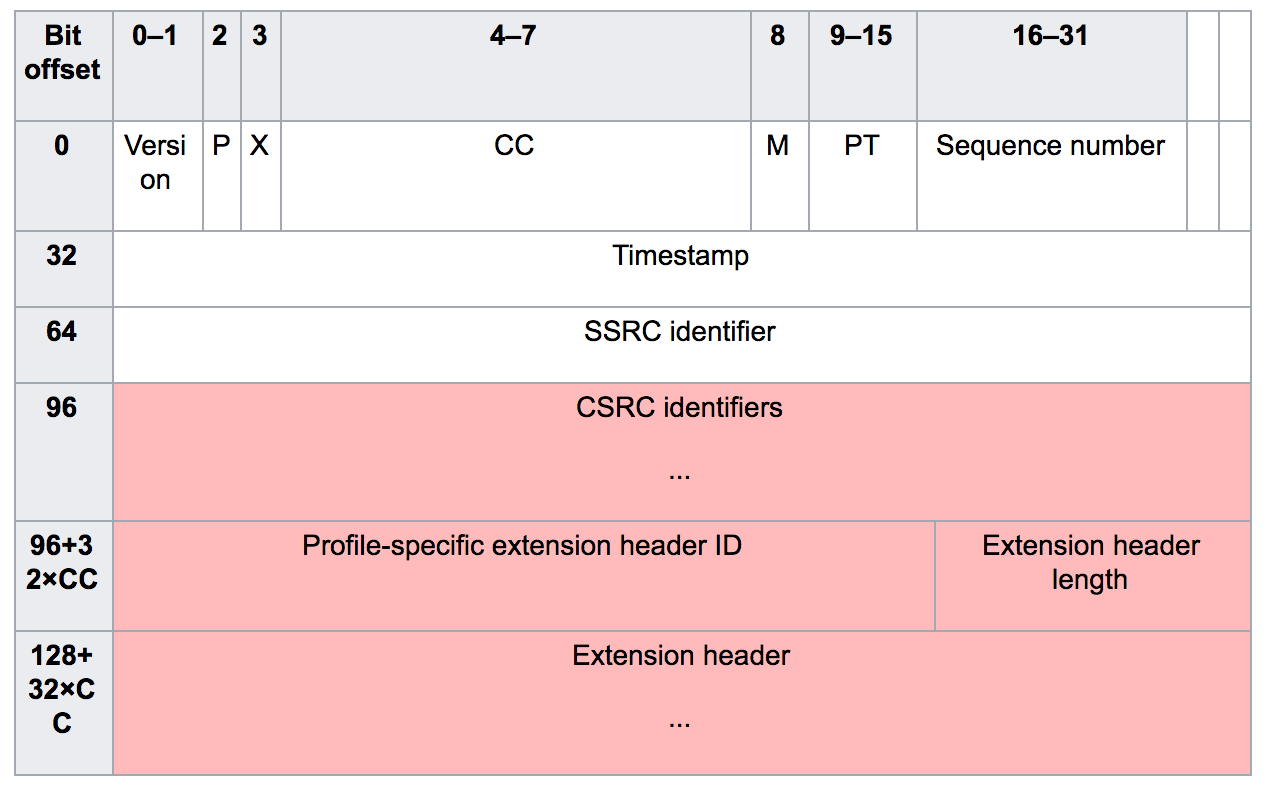

In the schema below, we see the headers used by the protocol:

When RTP is used for one-to-one sessions, the CSRC list is normally absent and the CC field is zero. Let’s see how each field inside the header is used. It’s worth noting that optional fields can be empty or missing when we analyze real RTP sessions between endpoints:

- Version: (2 bits) Indicates the version of the protocol. Current version is 2.

- P (Padding): (1 bit) Used to indicate if there are extra padding bytes at the end of the RTP packet. Padding might be used to fill up a block of a certain size (for example, as required by an encryption algorithm). The last byte of the padding contains the number of padding bytes that were added (including itself).

- X (Extension): (1 bit) Indicates the presence of an Extension header between the standard header and payload data. It is application- or profile-specific.

- CC (CSRC count): (4 bits) Contains the number of CSRC identifiers (defined below) that follow the fixed header.

- M (Marker): (1 bit) Used at the application level and defined by a profile. If it is set, it means that the current data has some special relevance for the application.

- PT (Payload type): (7 bits) Indicates the format of the payload and determines its interpretation by the application. It is specified by an RTP profile. For example, see RTP Profile for audio and video conferences with minimal control (RFC 3551).

- Sequence number: (16 bits) The sequence number is incremented by one for each RTP data packet sent, and it is used by the receiver to detect packet loss and to restore the packet sequence. RTP provides no guarantee of delivery and does not specify any action on packet loss; it is left to the application to take appropriate action. For example, video applications may play the last known frame in place of a missing frame. According to RFC 3550, the initial value of the sequence number should be random to make known-plaintext attacks on encryption more difficult.

- Timestamp: (32 bits) Used to allow the receiver to play back the received samples at appropriate intervals. When several media streams are present, the timestamps are independent in each stream, and may not be relied upon for media synchronization. The granularity of the timing is application specific. For example, an audio application that samples data once every 125 µs (8 kHz, a common sample rate in digital telephony) would use that value as its clock resolution. The clock granularity is one of the details that is specified in the RTP profile for an application.

- SSRC: (32 bits) The synchronization source identifier uniquely identifies the source of a stream. The synchronization sources within the same RTP session will be unique.

- CSRC: (32 bits each) Contributing source IDs enumerate contributing sources to a stream which has been generated from multiple sources.

- Header extension: (optional) The first 32-bit word contains a profile-specific identifier (16 bits) and a length specifier (16 bits), which indicates the length of the extension (EHL = extension header length) in 32-bit units, excluding the 32 bits of the extension header.
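The bit layout described above can be checked with a short Python sketch that unpacks the 12-byte fixed header using the standard `struct` module. The names `RtpHeader` and `parse_rtp_header` are illustrative assumptions, and the sample packet is hand-built rather than captured from a real session.

```python
import struct
from collections import namedtuple

RtpHeader = namedtuple(
    "RtpHeader",
    "version padding extension csrc_count marker payload_type "
    "sequence timestamp ssrc csrcs header_length",
)


def parse_rtp_header(packet):
    """Decode the RTP header fields; header_length is where the payload starts."""
    if len(packet) < 12:
        raise ValueError("RTP packet shorter than the 12-byte fixed header")
    # Network byte order: two flag bytes, 16-bit sequence, 32-bit timestamp, 32-bit SSRC.
    b0, b1, sequence, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    csrc_count = b0 & 0x0F
    extension = bool(b0 & 0x10)
    offset = 12 + 4 * csrc_count
    if len(packet) < offset:
        raise ValueError("packet truncated inside the CSRC list")
    csrcs = struct.unpack(f"!{csrc_count}I", packet[12:offset])
    if extension:
        if len(packet) < offset + 4:
            raise ValueError("packet truncated inside the extension header")
        # Length counts 32-bit words and excludes the 4-byte extension header itself.
        _profile_id, ext_words = struct.unpack("!HH", packet[offset:offset + 4])
        offset += 4 + 4 * ext_words
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=extension,
        csrc_count=csrc_count,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence=sequence,
        timestamp=timestamp,
        ssrc=ssrc,
        csrcs=csrcs,
        header_length=offset,
    )


# Hand-built sample: version 2, payload type 0, sequence 0x1234,
# timestamp 160, SSRC 0xDEADBEEF, followed by 160 bytes of dummy payload.
sample = bytes.fromhex("80001234000000a0deadbeef") + b"\x00" * 160
print(parse_rtp_header(sample))
```

For a one-to-one call the result typically has `csrc_count` equal to 0 and an empty `csrcs` tuple, so the payload starts right after the 12-byte fixed header.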

The fields which are most important to correctly reconstruct and decode the stream are:

- SSRC (identifies which stream a packet belongs to and allows SSRC collisions to be detected)

- Timestamp (needed to correctly play back the stream)

- Sequence Number (needed to put packets back in order and to detect loss when jitter affects the communication)
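As a rough illustration of how a receiver might use these fields, the Python sketch below reorders the packets of a single SSRC by sequence number, reports missing sequence numbers, and converts a timestamp into a playback offset. It assumes an 8 kHz clock and a window much smaller than 32768 packets; the function names are hypothetical, and a real jitter buffer is considerably more involved.

```python
def seq_delta(a, b):
    """Signed distance from b to a in 16-bit sequence space (handles wraparound)."""
    return ((a - b + 0x8000) & 0xFFFF) - 0x8000


def reorder_and_find_gaps(packets):
    """packets: (sequence, timestamp, payload) tuples of one SSRC in arrival order.

    Returns the packets sorted by sequence number plus the missing numbers.
    """
    if not packets:
        return [], []
    reference = packets[0][0]
    ordered = sorted(packets, key=lambda p: seq_delta(p[0], reference))
    missing = []
    for previous, current in zip(ordered, ordered[1:]):
        gap = seq_delta(current[0], previous[0])
        # A gap of 1 is the normal case; anything larger means lost packets.
        for step in range(1, gap):
            missing.append((previous[0] + step) & 0xFFFF)
    return ordered, missing


def playout_offset_seconds(timestamp, first_timestamp, clock_rate=8000):
    """Seconds between two RTP timestamps, allowing for 32-bit wraparound."""
    return ((timestamp - first_timestamp) & 0xFFFFFFFF) / clock_rate


# Packets arrive out of order across the 65535 -> 0 wrap; sequence 1 is lost.
arrived = [
    (65534, 0, b"a"),
    (0, 320, b"c"),
    (65535, 160, b"b"),
    (2, 640, b"e"),
]
ordered, missing = reorder_and_find_gaps(arrived)
print([p[0] for p in ordered], missing)
print(playout_offset_seconds(640, 0))
```

With 20 ms of 8 kHz audio per packet, the timestamp advances by 160 per packet, which is why the lost packet still leaves a 160-tick hole in the timeline that the application has to conceal.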

To be continued…
